AI compliance in the United States: from state laws to defense contracts and automotive safety

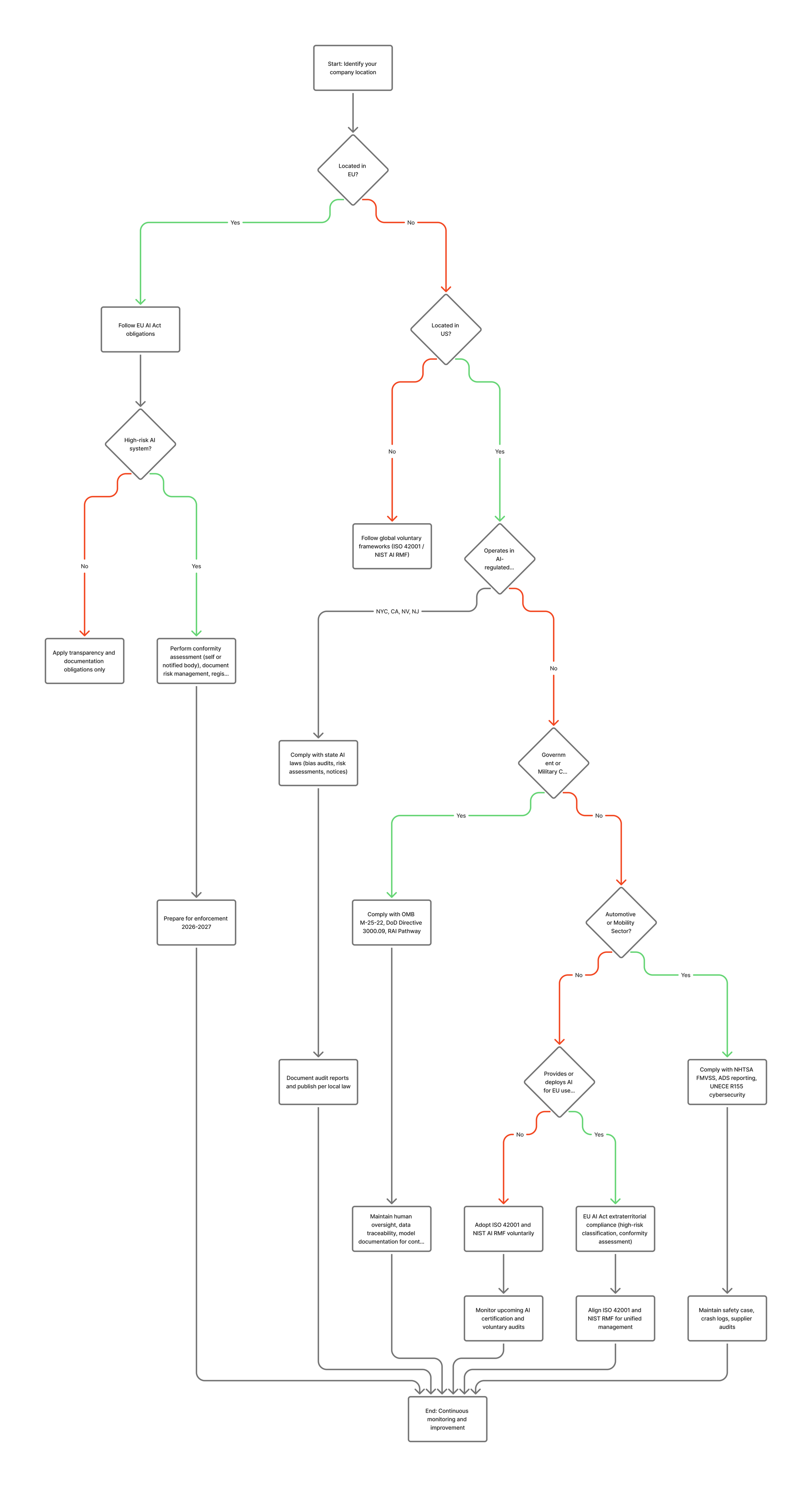

The downloadable PDF and the decision tree chart are at the end of this article.

The United States doesn’t yet have an EU-style Artificial Intelligence Act, but that doesn’t mean AI is unregulated.

Across states, federal agencies, and key industries like defense and automotive, a growing patchwork of enforceable rules already governs how AI may be developed, tested, procured, and deployed.

While many of these rules are sector-specific and contract-driven rather than certification-based, together they form the first generation of U.S. AI oversight.

1. The first layer: state and local AI laws

Although federal AI legislation is still under discussion, several states and municipalities have enacted binding requirements—particularly where AI directly impacts employment, privacy, or consumer rights.

| Jurisdiction and law | Effective date / status | Key obligations | Enforcement status |
|---|---|---|---|
| New York City (Local Law 144) | In effect since Jan 1, 2023; enforced since July 5, 2023 | Employers using “automated employment decision tools” (AEDTs) must commission an annual independent bias audit, publicly post the results, and give notice to applicants. | Active enforcement; the city’s Department of Consumer and Worker Protection can issue fines. |
| California (ADMT rules under CCPA) | Becomes enforceable Jan 1, 2027 | Risk assessment and consumer notification required for automated decision-making technologies used in high-impact areas such as finance, hiring, or health. | Rule finalized, with enforcement delayed until 2027; CCPA penalties apply. |
| Nevada (Assembly Bill 406) | Effective July 1, 2025 | Restricts AI use in mental/behavioral-health diagnosis or treatment unless under licensed programs with oversight. | Enforced by state health regulators. |
| New Jersey (Bill S2964/A3855) | Pending | Would mandate independent bias audits of automated hiring tools; vendors must provide audit results to clients. | Expected 2026 vote. |

What this means in practice

Companies using AI for employment screening, financial decisions, or healthcare applications in these jurisdictions already have enforceable duties:

- keep auditable documentation,

- perform bias testing (usually via an independent third party), and

- publish audit results or provide them to regulators on request.

This is the first taste of mandatory AI audits in the United States—even before a federal framework exists.
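To make "bias testing" concrete: one metric commonly reported in employment bias audits is the impact ratio, the selection rate of each group divided by the selection rate of the most-selected group. The Python sketch below computes it under simplifying assumptions. The function name, the group labels, and the sample data are all invented for illustration, and a real audit follows the methodology set by the applicable rule and is performed by the independent auditor.

```python
from collections import Counter


def impact_ratios(records):
    """Compute impact ratios from (category, was_selected) pairs.

    The impact ratio of a category is its selection rate divided by the
    selection rate of the most-selected category.
    """
    totals = Counter()
    selected = Counter()
    for category, was_selected in records:
        totals[category] += 1
        if was_selected:
            selected[category] += 1

    # Selection rate = candidates selected / candidates assessed, per category.
    rates = {c: selected[c] / totals[c] for c in totals}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any category")
    return {c: rate / best for c, rate in rates.items()}


# Hypothetical screening outcomes, invented for illustration only.
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
ratios = impact_ratios(sample)
for category, ratio in sorted(ratios.items()):
    print(f"{category}: impact ratio {ratio:.2f}")
```

In this made-up sample, group_a is selected at a rate of 0.40 and group_b at 0.30, so group_b's impact ratio is 0.75. Keeping the raw counts alongside the ratios is what makes the result auditable later.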

2. Federal obligations: executive guidance and agency frameworks

At the national level, AI oversight is currently driven by executive orders and agency-specific memoranda rather than a single law.

Executive Order 14110 (Oct 2023)

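This order set the direction, requiring federal agencies to ensure that AI use does not violate rights or safety and to develop standards for testing, red-teaming, and transparency. The order itself was rescinded in January 2025, but agency-level governance and procurement requirements continue under OMB guidance.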

OMB M-24-10 and M-25-22 (2024-2025)

- Every agency must appoint a Chief AI Officer and inventory all AI systems.

- Agencies must implement minimum risk-management practices and apply AI-specific procurement clauses in contracts.

- The guidance covers three categories:

  - General AI (productivity, analysis, customer-service tools)

  - Generative AI & biometrics (chatbots, voice synthesis, image generation)

  - Rights- or safety-impacting AI (decision systems in healthcare, policing, transportation)

For vendors, this means any contract to deliver AI-enabled solutions to a federal agency now comes with documentation, transparency, and audit obligations.

3. Military & defense contractors

Defense programs have had AI-related controls far longer than civilian ones.

The Department of Defense (DoD) governs AI through a combination of policy, ethics directives, and procurement language.

DoD Directive 3000.09 — Autonomy in weapon systems

- Requires “appropriate levels of human judgment over the use of force.”

- Demands verification & validation (V&V), safety assurance, cybersecurity, and traceability for any autonomous system.

- Contractors building or integrating AI into weapons or command systems must provide evidence of these controls.

Responsible AI Strategy & Implementation Pathway (RAI SIP)

- Establishes governance, testing, data-quality, and workforce-training requirements across DoD programs.

- Flows down through the Chief Digital and Artificial Intelligence Office (CDAO) into solicitations.

- Contractors must maintain traceability of data and model decisions, demonstrate human-in-the-loop mechanisms, and comply with continuous monitoring expectations.

AI-specific clauses in procurement

Typical deliverables now include:

- Model documentation (training data lineage, evaluation methods, bias reports)

- Operational oversight plans (who reviews AI outputs, escalation process)

- Incident-response and security procedures

- Rights-in-data clauses that ensure DoD access to underlying code or data if needed for mission continuity

For smaller vendors, these clauses act as a de facto certification system: you cannot win or keep a DoD AI contract unless your internal controls match these expectations.

4. Automotive & mobility sector

The U.S. automotive industry is governed through safety law, not AI law—but AI is now integral to vehicle safety.

Federal layer: NHTSA oversight

- The National Highway Traffic Safety Administration (NHTSA) enforces the Federal Motor Vehicle Safety Standards (FMVSS).

- Manufacturers must self-certify compliance with FMVSS before vehicles are sold.

- Standing General Order 2021-01 (amended in 2023 and 2025) requires crash and incident reporting for vehicles equipped with Automated Driving Systems (ADS) or Level 2 ADAS.

- Reports must include sensor data, system logs, and preliminary cause assessments.

State layer: California example

- The California DMV requires testing permits and disengagement reports, and collision reports must be filed within 10 days.

- The California Public Utilities Commission (CPUC) demands data on AV “stoppage” or public-safety interference incidents.

Industry & international influence

- The SAE J3016 levels of automation define what counts as Level 0-5 and guide design expectations.

- Global OEMs align with UNECE WP.29 R155 (Cybersecurity Management System) even for U.S. models, driving supplier audits on patching, vulnerability management, and threat analysis and risk assessment (TARA).

In short: automotive AI compliance today is a hybrid of self-certification, mandatory incident reporting, and global OEM audits—not yet a formal government-issued “AI certificate,” but every bit as binding in practice.

5. Self-assessment, audits, and third-party verification — how enforcement works

| Sector | Who verifies compliance | Typical mechanism | Notes |
|---|---|---|---|
| Federal civilian agencies | Internal compliance + potential Inspector General audit | Self-assessment with required documentation; agencies may audit vendors post-award. | No centralized “AI certification,” but OMB memos allow third-party evaluation clauses. |
| Defense contractors | Contracting Officer + DoD program office (CDAO/RAI) | Hybrid: internal V&V + external evaluation when risk or sensitivity is high (similar to CMMC). | DoD encourages independent test labs for autonomous systems. |
| Automotive | NHTSA & state regulators | Self-certification to FMVSS + mandatory reporting; OEMs conduct supplier audits. | Non-compliance leads to recalls, civil penalties, or permit loss. |
| State employment/consumer AI laws | Local regulators (e.g., NYC DCWP) | Third-party bias audit required by law. | First legally mandated external AI audits in the U.S. |

So, in the U.S. system:

- Low/medium-risk AI → self-assessment, record-keeping, internal QA.

- High-risk or contractually sensitive AI → external or third-party review.

- Safety-critical or rights-impacting AI → mandatory incident reporting or independent audit.
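The three tiers above can be written down as a small lookup, which is handy when triaging an inventory of AI systems. This is a minimal Python sketch, not an official taxonomy: the enum, the function, and the rule that the strictest matching tier wins are assumptions made for illustration.

```python
from enum import Enum


class RiskTier(Enum):
    LOW_MEDIUM = "low/medium-risk"
    HIGH_OR_CONTRACT_SENSITIVE = "high-risk or contractually sensitive"
    SAFETY_OR_RIGHTS_IMPACTING = "safety-critical or rights-impacting"


# Mechanisms copied from the three tiers listed above.
REVIEW_MECHANISMS = {
    RiskTier.LOW_MEDIUM: ["self-assessment", "record-keeping", "internal QA"],
    RiskTier.HIGH_OR_CONTRACT_SENSITIVE: ["external or third-party review"],
    RiskTier.SAFETY_OR_RIGHTS_IMPACTING: [
        "mandatory incident reporting",
        "independent audit",
    ],
}


def classify(safety_or_rights_impacting: bool,
             high_risk_or_contract_sensitive: bool) -> RiskTier:
    """Pick a tier; the strictest matching tier wins (an assumed precedence)."""
    if safety_or_rights_impacting:
        return RiskTier.SAFETY_OR_RIGHTS_IMPACTING
    if high_risk_or_contract_sensitive:
        return RiskTier.HIGH_OR_CONTRACT_SENSITIVE
    return RiskTier.LOW_MEDIUM


# Hypothetical example: a driver-assistance feature sold under an OEM contract.
tier = classify(safety_or_rights_impacting=True,
                high_risk_or_contract_sensitive=True)
print(tier.value, "->", ", ".join(REVIEW_MECHANISMS[tier]))
```

The two boolean inputs are placeholders for whatever intake questions your own governance process uses; the actual tier in any given case depends on the governing statute or contract.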

6. How this appears in real contract language

Federal and industry templates already embed AI-specific clauses:

Example 1 – Federal procurement (OMB M-24-18 sample)

“The Contractor shall provide sufficient documentation of the AI system, including data sources, training methods, and evaluation results, to allow the Agency to assess compliance with applicable standards and to perform audits or independent verification as required.”

Example 2 – Supplier audit clause (adapted)

“Supplier shall permit the Customer or its authorized third party to audit compliance with AI risk-management requirements, including review of model training data, evaluation records, and change logs.”

Example 3 – AI disclosure clause (state government)

“Seller shall disclose any Products or Services that utilize Artificial Intelligence and shall not employ such functionality without written authorization of the State and implementation of agreed safeguards.”

Example 4 – Automotive quality addendum

“Supplier shall maintain documentation demonstrating compliance with cybersecurity and AI-function validation requirements equivalent to UNECE R155 and provide access for customer audit upon request.”

Such language bridges current voluntary frameworks (like NIST AI RMF) with contractually enforceable obligations.

7. What companies should do now

Even without a single national AI-certification regime, the compliance trend is clear.

Organizations developing or deploying AI in sensitive contexts should:

- Adopt the NIST AI Risk Management Framework (AI RMF) to structure internal governance (Govern → Map → Measure → Manage).

- Implement ISO 42001 (AI Management System) for an auditable layer of governance; it bridges voluntary frameworks and the mandatory, contract-driven requirements described above.

- Maintain a “technical file”: model cards, data-provenance maps, evaluation results, limitations, human-oversight logs, incident records.

- Prepare for audits—even if they’re not yet required. When they arrive (state, agency, or OEM), evidence will already exist.

- Monitor contract terms: expect to see clauses on transparency, data access, human oversight, and independent evaluation.
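One lightweight way to keep the "technical file" audit-ready is to track it as a structured record and check it for gaps. The Python sketch below assumes the six artifact types listed above; the class name, field names, and file paths are hypothetical and do not come from any standard.

```python
from dataclasses import dataclass, field

# Artifact types taken from the "technical file" item in the list above.
REQUIRED_ARTIFACTS = (
    "model_card",
    "data_provenance_map",
    "evaluation_results",
    "limitations",
    "human_oversight_log",
    "incident_records",
)


@dataclass
class TechnicalFile:
    system_name: str
    # Maps artifact type -> where the evidence lives (path, URL, ticket ID).
    artifacts: dict = field(default_factory=dict)

    def missing(self) -> list:
        """Return required artifact types with no recorded location."""
        return [a for a in REQUIRED_ARTIFACTS if not self.artifacts.get(a)]

    def audit_ready(self) -> bool:
        return not self.missing()


# Hypothetical system with only two artifacts on record so far.
tf = TechnicalFile(
    system_name="resume-screener",
    artifacts={
        "model_card": "docs/model_card.md",
        "evaluation_results": "reports/eval.json",
    },
)
print("missing:", tf.missing())
print("audit ready:", tf.audit_ready())
```

Running a check like this per system, on a schedule, turns "prepare for audits" into a list of specific gaps to close.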

8. Key takeaway

The U.S. may not have a national AI certification law yet, but AI oversight is already real:

- States are auditing bias.

- Agencies are rewriting procurement contracts.

- The DoD demands human oversight and traceability.

- Automakers must self-certify safety and report incidents.

Each domain uses its own mechanism—self-assessment, audit clause, or third-party verification—but they all point toward a single future:

AI governance that is risk-based, documented, testable, and ready for external inspection.

I created this AI Compliance Decision Tree & Checklist to help you evaluate your own company — and your suppliers or vendors — for the AI governance steps required under current and upcoming laws.

The goal is simple: to give you a practical, risk-based pathway for identifying what rules apply, which standards you can adopt voluntarily, and how to prepare documentation before full enforcement begins.

By following this tree, you can determine:

- Whether your organization or suppliers are affected by the EU AI Act, U.S. state-level AI regulations, or sector-specific requirements (such as government contracting, defense, or automotive).

- What level of assessment applies — from internal self-assessment to independent third-party audits.

- Which frameworks (ISO 42001, NIST AI RMF) best support readiness for both European and U.S. markets.

This tool is designed as both a diagnostic map and a compliance register — enabling you to choose the right governance pathway, document your risk management practices, and stay ahead of emerging AI certification and audit requirements worldwide.
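For readers who prefer code to charts, the first branch of such a tree, deciding which regimes may apply, can be sketched in Python. This is not the decision tree from the PDF. It is a simplified illustration that uses only the regimes named in this article; the profile fields are hypothetical yes/no questions, and real applicability turns on the legal definitions in each rule.

```python
from dataclasses import dataclass


@dataclass
class CompanyProfile:
    # Hypothetical screening questions; all default to "no".
    uses_hiring_tool_in_nyc: bool = False
    uses_admt_in_california: bool = False
    sells_ai_to_federal_agency: bool = False
    dod_contractor: bool = False
    builds_ads_or_level2_adas: bool = False
    serves_eu_market: bool = False


# (profile field, regime discussed in this article)
RULES = [
    ("uses_hiring_tool_in_nyc", "NYC Local Law 144: independent bias audit"),
    ("uses_admt_in_california", "California ADMT rules under CCPA"),
    ("sells_ai_to_federal_agency", "OMB AI memoranda: procurement clauses"),
    ("dod_contractor", "DoD Directive 3000.09 and RAI SIP"),
    ("builds_ads_or_level2_adas", "NHTSA FMVSS and Standing General Order 2021-01"),
    ("serves_eu_market", "EU AI Act"),
]


def applicable_regimes(profile: CompanyProfile) -> list:
    """List every regime whose screening question is answered 'yes'."""
    return [label for attr, label in RULES if getattr(profile, attr)]


# Hypothetical supplier: sells to a federal agency and to the DoD.
supplier = CompanyProfile(sells_ai_to_federal_agency=True, dod_contractor=True)
for regime in applicable_regimes(supplier):
    print("-", regime)
```

Running the same questions against each supplier gives a first-pass compliance register that can then be reviewed with counsel.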